Predictive modelling often involves a trade-off between model complexity and generalisation. Highly flexible models can overfit, while overly simple models may miss important patterns. Decision stumps (single-level decision trees) are too simple to use alone, but they offer a useful middle path when combined inside ensemble frameworks. In practice, they are frequently used as “weak learners”, each contributing a small, incremental improvement to the overall model. This approach is especially useful when building transparent, fast models for business problems that show up in data analytics training in Chennai, such as churn prediction, lead scoring, and risk flagging.

What Is a Decision Stump?

A decision stump is a decision tree with depth 1. It makes exactly one split based on a single feature and a threshold (for numeric features) or a category grouping (for categorical features). For classification, a stump might look like:

- If Feature X ≤ threshold → predict class A

- Else → predict class B

For regression, it predicts a constant value on each side of the split (typically the mean of the target in that region). On its own, a stump is usually too simple to achieve high accuracy. That simplicity is exactly why it works well as a building block in ensembles: it has low variance, trains extremely fast, and is easy to interpret.
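To make the idea concrete, here is a minimal pure-Python sketch of a classification stump on one numeric feature. The function names (`fit_stump`, `predict_stump`) and the toy churn data are illustrative assumptions, not part of any library. The search simply tries the midpoints between consecutive distinct values and keeps the threshold with the fewest training errors.

```python
from collections import Counter

def majority(labels):
    """Most frequent label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]

def fit_stump(xs, ys):
    """Fit a one-split classifier on a single numeric feature.

    Returns (threshold, left_label, right_label, training_errors).
    """
    best = None
    values = sorted(set(xs))
    # Candidate thresholds: midpoints between consecutive distinct values.
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label, right_label = majority(left), majority(right)
        errors = (sum(y != left_label for y in left)
                  + sum(y != right_label for y in right))
        if best is None or errors < best[3]:
            best = (t, left_label, right_label, errors)
    return best

def predict_stump(stump, x):
    threshold, left_label, right_label, _ = stump
    return left_label if x <= threshold else right_label

# Toy data (made up): monthly support calls and whether the customer churned.
calls = [0, 1, 1, 2, 3, 5, 6, 7, 8, 9]
churn = ["stay", "stay", "stay", "stay", "churn",
         "stay", "churn", "churn", "churn", "churn"]

stump = fit_stump(calls, churn)
print(stump)
print(predict_stump(stump, 1), predict_stump(stump, 7))
```

On this toy data the chosen rule is "calls ≤ 2.5 → stay, else churn", with one training error (the customer with 5 calls who stayed). A production implementation would usually rank splits by an impurity measure such as Gini rather than by raw error count.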

Why Weak Learners Work When Combined

Ensembles succeed by combining multiple imperfect models so that their errors do not align perfectly. A single stump might be only slightly better than random guessing, but many stumps—each focusing on different aspects of the data—can form a strong predictor. There are two big reasons this works:

- Error reduction through aggregation: If each learner makes different mistakes, averaging (or weighted voting) can cancel out some errors.

- Incremental learning of patterns: Complex relationships can be approximated as a sum of simple rules. Each stump contributes one small rule; the ensemble becomes a “rule set” that is learned automatically.

This is why stumps are common in real-world machine learning pipelines: they are computationally cheap and provide robust results when combined correctly.
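The aggregation argument can be checked with a small calculation. The sketch below assumes something real ensembles only approximate: that every learner is correct with the same probability and that their errors are fully independent. Under that assumption, the accuracy of a simple majority vote follows from the binomial distribution. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb

def majority_vote_accuracy(n_learners, p_correct):
    """Probability that a strict majority of n independent learners is right.

    Assumes each learner is correct with probability p_correct,
    independently of the others (an idealisation).
    """
    needed = n_learners // 2 + 1
    return sum(
        comb(n_learners, k) * p_correct ** k * (1 - p_correct) ** (n_learners - k)
        for k in range(needed, n_learners + 1)
    )

# Learners that are only slightly better than a coin flip.
for n in (1, 11, 101):
    print(n, "learners:", round(majority_vote_accuracy(n, 0.55), 3))
```

With one learner the result is just 0.55, and it rises as more learners vote. Stumps trained on the same data are correlated, so the gain in practice is smaller than this idealised figure, which is why boosting and randomisation work to make the stumps differ from one another.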

Decision Stumps in Boosting Frameworks

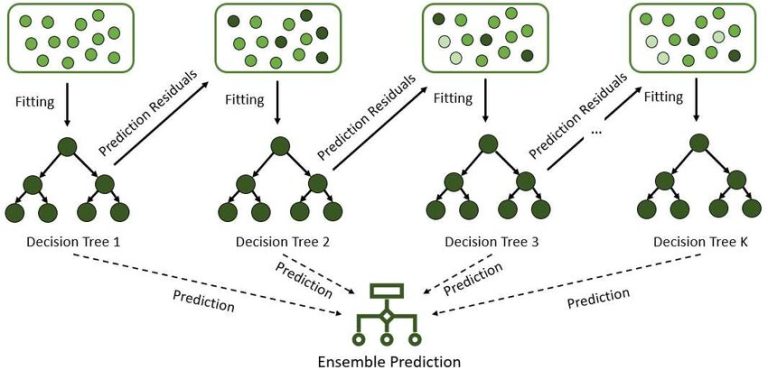

Boosting is the most common home for decision stumps. In boosting, learners are trained sequentially, and each new stump focuses on the observations the previous ones handled poorly.

AdaBoost with stumps (classification)

AdaBoost trains a stump, measures its weighted error, then increases the weights of misclassified points so the next stump pays more attention to them. Each stump also receives a vote weight that grows as its weighted error shrinks, and the final prediction is a weighted vote across all stumps. The effect is that the ensemble gradually concentrates on hard boundary cases.
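The reweighting loop described above can be sketched from scratch in a few lines. This is a minimal illustration of discrete AdaBoost for labels in {-1, +1}, not a replacement for a library implementation. The helper names and the toy "risk flag" data (three binary flags, with a case labelled risky when at least two are raised) are assumptions made for the example.

```python
import math
from itertools import product

def fit_weighted_stump(X, y, w):
    """Return (weighted_error, feature, threshold, polarity).

    The stump predicts `polarity` when row[feature] <= threshold, else -polarity.
    """
    best = None
    for j in range(len(X[0])):
        values = sorted({row[j] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            for polarity in (1, -1):
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if (polarity if row[j] <= t else -polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, polarity)
    return best

def stump_predict(stump, row):
    _, j, t, polarity = stump
    return polarity if row[j] <= t else -polarity

def adaboost(X, y, n_rounds):
    n = len(X)
    w = [1.0 / n] * n                      # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_weighted_stump(X, y, w)
        err = min(max(stump[0], 1e-10), 1 - 1e-10)   # avoid log(0)
        alpha = 0.5 * math.log((1 - err) / err)      # vote weight of this stump
        ensemble.append((alpha, stump))
        # Misclassified points are scaled up, correct ones down, then renormalised.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for row, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, row):
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

def accuracy(ensemble, X, y):
    return sum(predict(ensemble, row) == yi for row, yi in zip(X, y)) / len(y)

# Toy data (made up): three binary warning flags; risky (+1) if two or more are set.
X = [list(bits) for bits in product([0, 1], repeat=3)]
y = [1 if sum(row) >= 2 else -1 for row in X]

for rounds in (1, 3):
    print(rounds, "round(s): training accuracy", accuracy(adaboost(X, y, rounds), X, y))
```

On this toy data a single stump reaches 75% training accuracy, because any one flag agrees with the two-out-of-three rule on six of the eight rows. After three rounds the weighted vote of the three stumps reproduces the rule exactly, so training accuracy reaches 100%.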

Gradient boosting with stumps (regression and classification)

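In gradient boosting, each stump is fitted to the negative gradient of the loss with respect to the current ensemble's predictions. For squared-error regression this is simply the residual (the actual value minus the current prediction). The model is built as an additive process: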

- Start with a simple baseline prediction

- Fit a stump to the residuals

- Add it to the model with a learning rate

- Repeat

This is how many widely used boosted-tree systems build strong predictors. When stumps are used as base learners, the model can behave like a controlled, step-by-step rule accumulator, which can be helpful for interpretability and stable training in business datasets.
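The four steps above can be sketched in pure Python for the squared-error case, where the quantity each stump fits is the plain residual. The function names, the single-feature setup, and the toy data are assumptions made for illustration. Library implementations add many refinements, such as other loss functions and subsampling.

```python
def fit_regression_stump(xs, targets):
    """Least-squares stump on one feature: (threshold, left_mean, right_mean)."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    return best[1:]

def gradient_boost(xs, ys, n_stumps, learning_rate=0.5):
    baseline = sum(ys) / len(ys)                 # step 1: constant baseline
    preds = [baseline] * len(ys)
    stumps = []
    for _ in range(n_stumps):                    # step 4: repeat
        residuals = [y - p for y, p in zip(ys, preds)]
        t, left_mean, right_mean = fit_regression_stump(xs, residuals)  # step 2
        stumps.append((t, left_mean, right_mean))
        # step 3: add the stump's output, shrunk by the learning rate
        preds = [p + learning_rate * (left_mean if x <= t else right_mean)
                 for x, p in zip(xs, preds)]
    return baseline, learning_rate, stumps

def predict(model, x):
    baseline, learning_rate, stumps = model
    return baseline + learning_rate * sum(
        left_mean if x <= t else right_mean for t, left_mean, right_mean in stumps)

def mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)

# Toy data (made up): a smooth curve that no single split can capture.
xs = list(range(10))
ys = [x * x for x in xs]

for n in (0, 5, 50):
    model = gradient_boost(xs, ys, n)
    print(n, "stumps: training MSE", round(mse(model, xs, ys), 3))
```

Training error falls as stumps are added, because each one removes part of the remaining residual. That says nothing about error on unseen data, which is why the number of stumps and the learning rate are normally chosen on a validation set.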

Use cases that often come up in data analytics training in Chennai—like predicting payment default or forecasting support-ticket escalation—can benefit from boosting with stumps when you need strong performance but also want a model you can explain in terms of simple splits.

Bagging and Randomisation: Where Stumps Fit

Bagging (bootstrap aggregating) trains many models in parallel on bootstrapped samples of the dataset and averages their predictions. Decision stumps can be bagged, but bagging mainly reduces variance, and a stump's main weakness is bias rather than variance, so pure bagging usually adds less power than boosting. Still, bagged stumps can help when:

- You want a lightweight ensemble that trains quickly.

- You need stability against noise and minor sampling variations.

- You want feature-level signals surfaced as repeated “top splits.”

Random subspace methods can also be useful: each stump is allowed to consider only a random subset of features. This encourages diversity across stumps and can reduce the chance that the ensemble becomes dominated by one strong but misleading feature.
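A rough sketch of bagging combined with a random subspace is shown below. Each stump sees its own bootstrap sample and may only choose among a random subset of features, and the ensemble predicts by majority vote. All names (`bagged_stumps`, `n_features_per_stump`) and the lead-scoring rows are hypothetical and exist only for this example.

```python
import random
from collections import Counter

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_stump(rows, labels, features):
    """Best (feature, threshold, left_label, right_label) among allowed features."""
    best = None
    for j in features:
        values = sorted({row[j] for row in rows})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for row, y in zip(rows, labels) if row[j] <= t]
            right = [y for row, y in zip(rows, labels) if row[j] > t]
            left_label, right_label = majority(left), majority(right)
            errors = (sum(y != left_label for y in left)
                      + sum(y != right_label for y in right))
            if best is None or errors < best[0]:
                best = (errors, j, t, left_label, right_label)
    if best is None:  # every allowed feature was constant in this sample
        label = majority(labels)
        return (features[0], 0.0, label, label)
    return best[1:]

def bagged_stumps(rows, labels, n_stumps, n_features_per_stump, seed=0):
    rng = random.Random(seed)
    n, d = len(rows), len(rows[0])
    ensemble = []
    for _ in range(n_stumps):
        idx = [rng.randrange(n) for _ in range(n)]              # bootstrap sample
        features = rng.sample(range(d), n_features_per_stump)   # random subspace
        ensemble.append(fit_stump([rows[i] for i in idx],
                                  [labels[i] for i in idx], features))
    return ensemble

def predict(ensemble, row):
    votes = [left if row[j] <= t else right for j, t, left, right in ensemble]
    return majority(votes)

# Made-up lead-scoring rows: [site_visits, emails_opened, days_since_contact]
rows = [[1, 0, 30], [2, 1, 25], [3, 0, 40], [2, 2, 20], [8, 5, 3],
        [9, 4, 5], [7, 6, 2], [10, 3, 4], [4, 1, 15], [6, 4, 6]]
labels = ["cold", "cold", "cold", "cold", "hot",
          "hot", "hot", "hot", "cold", "hot"]

ensemble = bagged_stumps(rows, labels, n_stumps=25, n_features_per_stump=2)
top_splits = Counter(j for j, _, _, _ in ensemble)
print("times each feature index was chosen:", dict(top_splits))
print("prediction for [5, 3, 10]:", predict(ensemble, [5, 3, 10]))
```

Counting how often each feature is chosen gives a simple view of the repeated "top splits" mentioned above. Restricting each stump to a subset of features stops one dominant feature from winning every time.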

Practical Workflow and Common Pitfalls

To use decision stumps effectively in predictive modelling, focus on the following steps:

- Feature preparation:

  - Handle missing values consistently, encode categorical variables carefully, and consider monotonic transformations for skewed numeric variables.

- Choose the right ensemble and constraints:

  - For maximum performance: boosting (with learning rate and number of estimators tuned)

  - For speed and robustness: bagging/randomised stumps

  - Regularisation matters: too many stumps with a high learning rate can overfit.

- Tune with validation, not intuition:

  - Use cross-validation or a strong holdout set. Key parameters include:

    - Number of stumps (estimators)

    - Learning rate (for boosting)

    - Minimum samples per leaf (even for stumps, this stops a split from isolating a handful of points and so controls stability)

- Interpretability checks:

  - Stumps are simple, but hundreds of them can be hard to explain. Use feature importance, partial dependence, or SHAP summaries to communicate what the ensemble is doing.
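As one way to "tune with validation", the sketch below boosts regression stumps on a training split and records the holdout error after every added stump, then picks the count with the lowest holdout error. The synthetic data, the split sizes, and the function names are assumptions made for illustration. In practice, cross-validation over several folds gives a more stable estimate than a single holdout.

```python
import random

def fit_stump(xs, targets):
    """Least-squares stump on one feature: (threshold, left_mean, right_mean)."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    return best[1:]

def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

def validation_curve(train, valid, max_stumps, learning_rate):
    """Boost on `train`; after each added stump, record the MSE on `valid`."""
    (x_tr, y_tr), (x_va, y_va) = train, valid
    baseline = sum(y_tr) / len(y_tr)
    p_tr = [baseline] * len(y_tr)
    p_va = [baseline] * len(y_va)
    curve = []
    for _ in range(max_stumps):
        residuals = [y - p for y, p in zip(y_tr, p_tr)]
        t, left_mean, right_mean = fit_stump(x_tr, residuals)
        p_tr = [p + learning_rate * (left_mean if x <= t else right_mean)
                for x, p in zip(x_tr, p_tr)]
        p_va = [p + learning_rate * (left_mean if x <= t else right_mean)
                for x, p in zip(x_va, p_va)]
        curve.append(mse(y_va, p_va))
    return curve

# Synthetic data (an assumption for illustration): a noisy step function.
rng = random.Random(42)
xs = [rng.uniform(0, 10) for _ in range(120)]
ys = [(1.0 if x > 5 else 0.0) + rng.gauss(0, 0.3) for x in xs]
train = (xs[:90], ys[:90])
valid = (xs[90:], ys[90:])

curve = validation_curve(train, valid, max_stumps=60, learning_rate=0.5)
best_n = curve.index(min(curve)) + 1
print("number of stumps with the lowest holdout MSE:", best_n)
```

The same loop can be repeated for several learning rates to compare their best holdout errors. Stopping at `best_n` rather than at the maximum is a simple form of early stopping.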

A practical mindset taught in data analytics training in Chennai is to treat stumps as “signal detectors”: each stump captures one small predictive cue, and your job is to ensure those cues generalise beyond the training data.

Conclusion

Decision stumps are intentionally weak models, but inside ensembles they become powerful tools for predictive modelling. Their speed, simplicity, and low variance make them excellent weak learners, especially in boosting frameworks where each stump corrects the mistakes of the previous ones. When combined with careful validation and sensible regularisation, stump-based ensembles can deliver strong accuracy while remaining grounded in simple, explainable decision rules—an approach that fits many applied problems covered in data analytics training in Chennai.